Wolfram Hardt, Professor at Chemnitz University of Technology, gives insight into high-performance image processing for adaptive unmanned micro air vehicles (MAVs)

Unmanned micro air vehicles (MAVs) are used by professional pilots for a wide range of industrial applications, for example when inspecting buildings and infrastructure. For collision avoidance and flight control, MAVs are equipped with various types of sensors, whose number and type are limited by size and weight restrictions. However, high-performance applications such as autonomous or adaptive MAV missions require intelligent real-time sensors for:

- Classification of the current flight context;

- Determination of the current mission status; and

- Mission-dependent MAV actions.

The typical sensor paradigm, quantizing a single measurable physical phenomenon such as light, speed or distance, is much too restricted for complex sensing applications of this kind.

The computer engineering group at Chemnitz University of Technology has developed a new approach for this kind of sensing application, based on real-time image processing. An additional, freely movable camera is added to the MAV's equipment. Its weight, interfaces and costs can be determined exactly beforehand. With high-performance image processing in place, we implement various feature point detection algorithms to classify the current flight context, determine the mission status and trigger mission-dependent MAV actions.
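To illustrate how detected features could drive these three tasks, the following minimal Python sketch maps a detection summary to a flight context, updates a mission status and selects an action. Everything in it is hypothetical: the context names, the `Detection` and `MissionStatus` fields, the action strings and the decision rules are assumptions chosen for a power-line inspection example, not the group's actual decision logic.

```python
from dataclasses import dataclass
from enum import Enum


class FlightContext(Enum):
    """Hypothetical flight contexts for a power-line inspection mission."""
    LINE_VISIBLE = "line_visible"
    LINE_LOST = "line_lost"
    INSULATOR_AHEAD = "insulator_ahead"


@dataclass
class Detection:
    """Hypothetical per-event summary produced by the feature detectors."""
    line_count: int        # number of power-line candidates found
    insulator_found: bool  # True when a newly reached insulator is detected


@dataclass
class MissionStatus:
    insulators_inspected: int = 0
    insulators_planned: int = 3  # hypothetical mission goal

    @property
    def complete(self) -> bool:
        return self.insulators_inspected >= self.insulators_planned


# Hypothetical mission-dependent action for each flight context.
ACTIONS = {
    FlightContext.LINE_VISIBLE: "follow_line",
    FlightContext.LINE_LOST: "hover_and_search",
    FlightContext.INSULATOR_AHEAD: "slow_down_and_capture",
}


def classify_context(d: Detection) -> FlightContext:
    if d.insulator_found:
        return FlightContext.INSULATOR_AHEAD
    if d.line_count >= 2:  # assumption: two lines are needed for an intersection
        return FlightContext.LINE_VISIBLE
    return FlightContext.LINE_LOST


def step(status: MissionStatus, d: Detection) -> str:
    """Classify the context, update the mission status, choose an action."""
    context = classify_context(d)
    if context is FlightContext.INSULATOR_AHEAD:
        status.insulators_inspected += 1
    if status.complete:
        return "return_home"
    return ACTIONS[context]
```

Keeping context classification, status tracking and action selection as separate steps mirrors the three sensing tasks listed above, so each can be changed independently.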

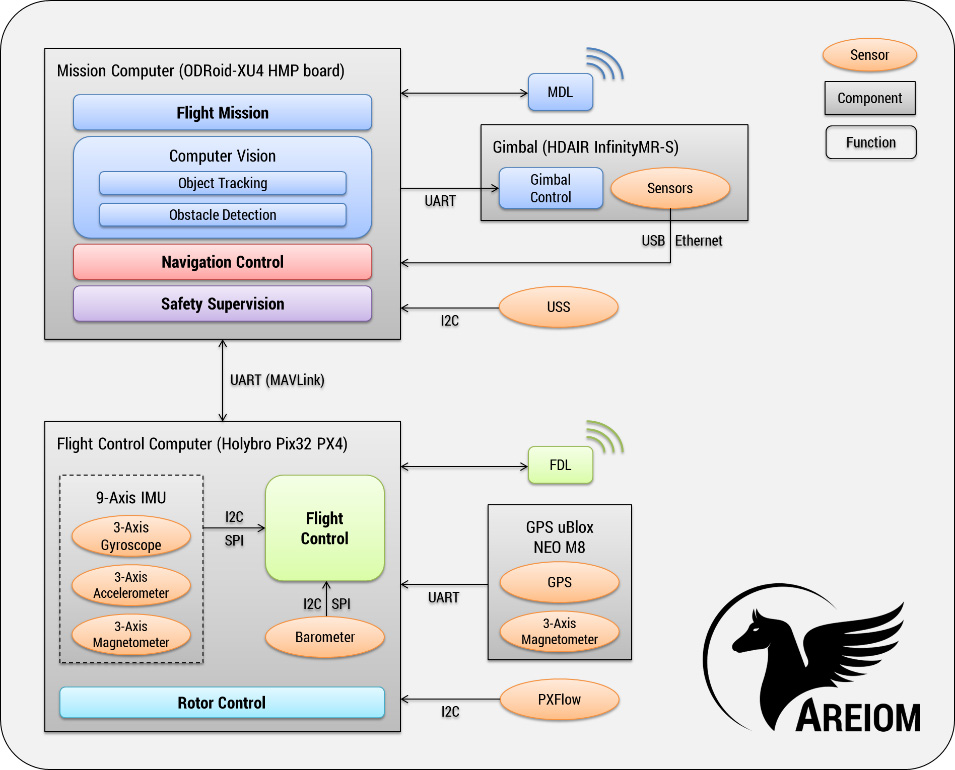

The implementation is based on the Areiom platform, which defines five separate architecture levels for specific MAV tasks. In particular, three control levels are distinguished, handling the rotors, the flight parameters and the navigation functions. Two further architecture levels are introduced for safety supervision and the flight mission. Finally, sensors, the gimbal and additional features are connected through standard interfaces, so the detection camera can be integrated as an intelligent sensor.

Standard functions are implemented on the three control layers. The newly introduced flight mission layer offers computation resources for adaptable flight missions. Building on this adaptability, autonomous missions have been implemented successfully for applications of limited complexity.
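The five-level structure can be modelled as a simple command path. This sketch is an illustration under stated assumptions, not the Areiom API: the `Level` names, their numbering, the placement of safety supervision between the mission level and the control levels, and the 50 m altitude limit are all hypothetical.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical model of the five Areiom architecture levels,
    numbered from the hardware (1) up to the flight mission (5)."""
    ROTOR_CONTROL = 1
    FLIGHT_CONTROL = 2
    NAVIGATION = 3
    SAFETY_SUPERVISION = 4
    FLIGHT_MISSION = 5


# The three control levels that host the standard functions.
CONTROL_LEVELS = (Level.NAVIGATION, Level.FLIGHT_CONTROL, Level.ROTOR_CONTROL)


def dispatch(command, altitude_limit_m=50.0):
    """Pass a mission command down the stack and record the levels it visits.

    Assumption: safety supervision checks every mission command before it
    reaches the control levels and may veto it (hypothetical altitude limit).
    """
    trace = [Level.FLIGHT_MISSION, Level.SAFETY_SUPERVISION]
    if command["altitude_m"] > altitude_limit_m:
        return "rejected", trace
    trace.extend(CONTROL_LEVELS)
    return "accepted", trace
```

With this layout, adaptable mission logic only ever talks to the top level, while the standard control functions below it stay unchanged.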

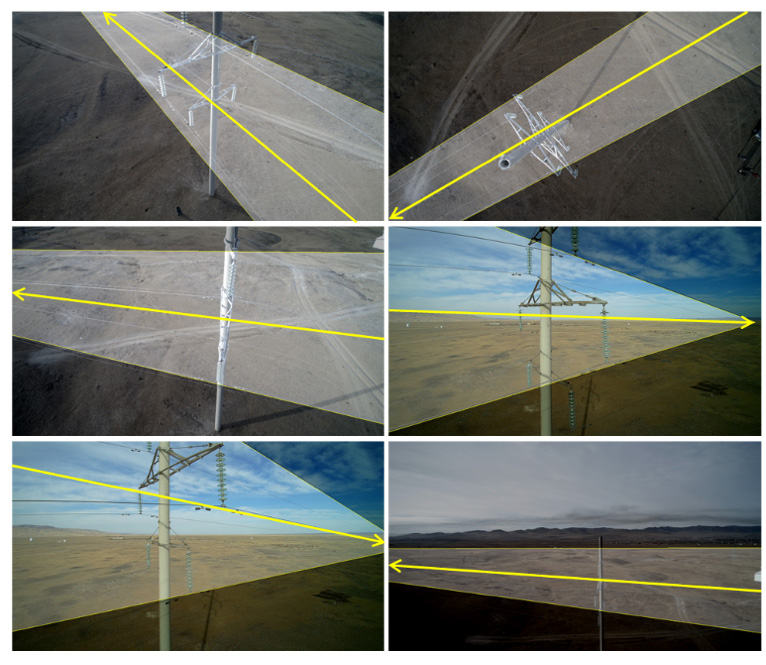

For the first class of applications, the inspection of industrial high-voltage power lines and insulators, we implemented image-based sensing. While the MAV mission is active, we analyse a 1080p video stream at 30 fps in real time to determine the heading direction (HD), the key piece of sensing information the MAV needs to follow the high-voltage power line. First, several filters such as line detection, edge detection and the Hough transform are applied. Second, we compute the intersection point of the detected lines. From this point, the HD value is determined.
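The processing chain above can be sketched in plain Python. This is a minimal illustration, not the group's implementation: it assumes edge pixels have already been extracted, uses a textbook (rho, theta) Hough transform with crude peak suppression, and defines HD, as an assumption, as the angle between straight ahead and the ray from the bottom centre of the frame to the intersection point. All function names are hypothetical.

```python
import math
from collections import Counter


def hough_lines(edge_points, theta_bins=180, top_k=2, min_sep=10):
    """Textbook Hough transform: every edge pixel votes for all (rho, theta)
    lines through it. Returns the top_k peaks as (rho, theta_in_radians)."""
    votes = Counter()
    for x, y in edge_points:
        for t in range(theta_bins):
            theta = math.pi * t / theta_bins
            votes[(round(x * math.cos(theta) + y * math.sin(theta)), t)] += 1
    peaks = []
    for (rho, t), _count in votes.most_common():
        # Crude non-maximum suppression: ignore bins next to an accepted peak.
        if all(abs(rho - r) > min_sep or abs(t - tt) > min_sep for r, tt in peaks):
            peaks.append((rho, t))
            if len(peaks) == top_k:
                break
    return [(rho, math.pi * t / theta_bins) for rho, t in peaks]


def intersect(line1, line2):
    """Solve x*cos(t) + y*sin(t) = rho for two lines (Cramer's rule)."""
    (r1, t1), (r2, t2) = line1, line2
    det = math.sin(t2 - t1)  # = cos(t1)*sin(t2) - sin(t1)*cos(t2)
    if abs(det) < 1e-9:
        return None  # parallel lines have no unique intersection
    x = (r1 * math.sin(t2) - r2 * math.sin(t1)) / det
    y = (r2 * math.cos(t1) - r1 * math.cos(t2)) / det
    return x, y


def heading_deg(point, width, height):
    """Hypothetical HD definition: angle from the bottom centre of the frame
    to the intersection point; 0 = straight ahead, positive = to the right.
    Image y grows downwards, so height - y is the upward distance."""
    x, y = point
    return math.degrees(math.atan2(x - width / 2, height - y))
```

For two synthetic lines meeting at pixel (250, 250) in a 640 x 480 frame, `intersect(*hough_lines(points))` lands within a pixel of that point and the resulting heading points left of centre. A pure-Python Hough transform is far too slow for a 1080p stream at 30 fps; a real-time system would rely on optimised implementations such as OpenCV's `cv2.Canny` and `cv2.HoughLines`.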

Our approach to image-based sensing is very flexible and can easily be adapted to a wide range of further feature points needed for adaptive MAV missions. Thus, a new level of sensing is introduced. Mechanical problems due to sensor mounting or weight restrictions are completely eliminated: once the MAV is equipped with the sensing camera, the setup is fixed. All feature point detection algorithms for sensing are implemented in software and mapped directly onto the Areiom platform.
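Because detectors live purely in software, supporting a new kind of feature point amounts to registering another function. The registry below is a hypothetical sketch of that idea: `DETECTORS`, `register` and the two toy threshold detectors are illustrative names, not part of the Areiom platform, and images are plain nested lists of grey values to keep the example self-contained.

```python
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]  # grey values 0..255, row-major
Detector = Callable[[Image], List[Tuple[int, int]]]

# Hypothetical registry mapping feature names to detector functions.
DETECTORS: Dict[str, Detector] = {}


def register(name: str) -> Callable[[Detector], Detector]:
    """Decorator that makes a detector available under `name`."""
    def wrap(fn: Detector) -> Detector:
        DETECTORS[name] = fn
        return fn
    return wrap


@register("bright_spots")
def bright_spots(image: Image) -> List[Tuple[int, int]]:
    """(x, y) of pixels above a hypothetical brightness threshold of 200."""
    return [(x, y) for y, row in enumerate(image)
            for x, v in enumerate(row) if v > 200]


# A new mission needs a new feature type: only software is added.
@register("dark_spots")
def dark_spots(image: Image) -> List[Tuple[int, int]]:
    """(x, y) of pixels below a hypothetical darkness threshold of 50."""
    return [(x, y) for y, row in enumerate(image)
            for x, v in enumerate(row) if v < 50]


def run_detectors(image: Image, names: List[str]) -> Dict[str, List[Tuple[int, int]]]:
    """Run the detectors selected for the current mission."""
    return {name: DETECTORS[name](image) for name in names}
```

A mission configuration then only needs a list of detector names, while the camera and mounting stay exactly as they are.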

Standard filter functions are implemented as a library, so that combinations of filters and special feature point detection tasks can be evaluated in our laboratory. Test flights for evaluation are performed in our indoor flight centre (IFC), which allows controlled evaluation of image-based sensing and adaptive MAV missions. With dedicated cameras, the MAV can be observed from three different perspectives, allowing detailed flight and mission analysis.
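One way to make library filters freely combinable is to give every filter the same image-in, image-out signature and chain them. This minimal Python sketch assumes that design; the filter names, the one-dimensional blur and the thresholds are hypothetical and only illustrate how alternative combinations can be evaluated side by side on the same input.

```python
from functools import reduce
from typing import Callable, List

Image = List[List[float]]
Filter = Callable[[Image], Image]


def compose(*filters: Filter) -> Filter:
    """Chain filters left to right into one filter."""
    return lambda image: reduce(lambda acc, f: f(acc), filters, image)


def box_blur_3x1(image: Image) -> Image:
    """Horizontal 3-tap mean filter; border pixels are copied unchanged."""
    out = [row[:] for row in image]
    for y, row in enumerate(image):
        for x in range(1, len(row) - 1):
            out[y][x] = (row[x - 1] + row[x] + row[x + 1]) / 3
    return out


def horizontal_gradient(image: Image) -> Image:
    """Absolute difference to the right-hand neighbour (simple edge response)."""
    return [[abs(row[x + 1] - row[x]) for x in range(len(row) - 1)] for row in image]


def threshold(t: float) -> Filter:
    """Binarise: 1 where the value is at least t, else 0."""
    return lambda image: [[1 if v >= t else 0 for v in row] for row in image]


# Two hypothetical filter combinations to compare in the lab.
sharp_edges = compose(horizontal_gradient, threshold(50))
smoothed_edges = compose(box_blur_3x1, horizontal_gradient, threshold(30))
```

Swapping `box_blur_3x1` in or out changes the pipeline without touching any other filter, which keeps laboratory comparisons of filter combinations cheap: on a single step edge, the first pipeline marks one pixel while the smoothed one marks a three-pixel band.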

For image-based sensing, we provide projections of real flight situations within the IFC. Thus, the flight context, the mission status and all MAV actions can be validated efficiently.

Implemented on the Areiom platform, our new approach enables adaptive MAV missions through image-based sensing. With this promising technology, MAVs can be used far more flexibly, safely and efficiently in urban environments.

Please note: this is a commercial profile

Prof Dr Wolfram Hardt

Chemnitz University of Technology

Tel: +49 371 531 25550