Machine learning has already been widely accepted in the private sector; however, it is often feared in the public sector. Here, Simon Dennis, Director of AI & Analytics Innovation, SAS UK, explores the benefits of using machine learning in healthcare.

Machine learning is one aspect of the AI portfolio of capability that has been with us in various forms for decades, so it’s hardly a product of science fiction. It’s widely used as a means of processing high volumes of customer data to provide a better service and hence increase profits.

Yet things become more complex when the technology is brought into the public sector, where many decisions can greatly affect our lives. AI is often feared, particularly for removing the human touch, which could lead to unfair judgements or to decisions that cause injury, death or even the complete destruction of humanity. If we think about medical diagnoses or the unfair denial of welfare for a citizen, it’s apparent where the first two fears arise. Hollywood can take credit for the final scenario.

Whatever the fear, we shouldn’t throw the baby out with the bathwater. Local services in the UK face a £7.8 billion funding gap by 2025. With services already cut to the bone, central and local government organisations, along with the NHS, need new approaches and technologies to drive efficiency while also improving the service quality. Often this means collaboration between service providers, but collaboration between man and machine can also play a part.

Machine learning systems can help transform the public sector, driving better decisions through more accurate insights and streamlining service delivery through automation. What’s important, however, is that we don’t try to replace human judgement and creativity with machine efficiency – we need to combine them.

Augmenting, not replacing, human decisions

There’s a strong case to be made for greater adoption of machine learning across a diverse range of activities. The basic premise of machine learning is that a computer can derive a formula from large volumes of historical data, and that this formula can then predict certain things the data describes. This formula is often termed an algorithm or a model. We use this algorithm with new data to make decisions for a specific task, or we use the additional insight that the algorithm provides to enrich our understanding and drive better decisions.
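To make the premise concrete, here is a minimal sketch of deriving a formula from historical data and applying it to a new case. It uses ordinary least squares on a single variable, which is only one of many possible techniques. The data, the variable meanings and the function names `fit_line` and `predict` are all invented for illustration.

```python
# Hypothetical historical records: (weeks of therapy, recovery score).
# The figures are invented purely for illustration.
history = [(1, 3.0), (2, 5.0), (3, 7.0), (4, 9.0)]


def fit_line(records):
    """Derive a straight-line formula (slope, intercept) by least squares."""
    n = len(records)
    mean_x = sum(x for x, _ in records) / n
    mean_y = sum(y for _, y in records) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in records)
    sxx = sum((x - mean_x) ** 2 for x, _ in records)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the derived formula to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


model = fit_line(history)   # "learning" from the historical data
print(predict(model, 5))    # using the formula on new data
```

Real systems use far richer data and models, but the pattern is the same: fit on history, then predict for new cases.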

For example, machine learning can analyse patients’ interactions in the healthcare system and highlight which combinations of therapies in what sequence offer the highest success rates for patients; and maybe how this regime is different for different age ranges. When combined with some decisioning logic that incorporates resources (availability, effectiveness, budget, etc.) it’s possible to use the computers to model how scarce resources could be deployed with maximum efficiency to get the best-tailored regime for patients.

When we then automate some of this, machine learning can even identify areas for improvement in real-time and far faster than humans – and it can do so without bias, ulterior motives or fatigue-driven error. So, rather than being a threat, it should perhaps be viewed as a reinforcement for human effort in creating fairer and more consistent service delivery.

Machine learning is also an iterative process; as the machine is exposed to new data and information, it adapts through a continuous feedback loop, which in turn provides continuous improvement. As a result, it produces more reliable results over time and ever more finely tuned and improved decision-making. Ultimately, it’s a tool for driving better outcomes.

The full picture

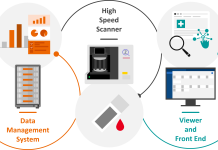

The opportunities for AI to enhance service delivery are many. Another example in healthcare is Computer Vision (another branch of AI), which is being used in cancer screening and diagnosis. We’re already at the stage where AI, trained from huge libraries of images of cancerous growths, is better at detecting cancer than human radiologists. This application of AI has numerous examples, such as work being done at Amsterdam UMC to increase the speed and accuracy of tumour evaluations.

But let’s not get this picture wrong. Here, the true value is in giving the clinician more accurate insight or a second opinion that informs their diagnosis and, ultimately, the patient’s final decision regarding treatment. A machine is there to do the legwork, but the decision to start a programme of cancer treatment remains with the humans.

Acting with this enhanced insight enables doctors to become more efficient as well as effective. By combining the results of CT scans with advanced genomics using analytics, the technology can assess how patients will respond to certain treatments. This means clinicians can spare patients the stress, side effects and cost of procedures with limited efficacy, while reducing waiting times for those patients whose condition would respond well. Yet full-scale automation could run the risk of creating a lot more VOMIT.

Risk of VOMIT

Victims Of Modern Imaging Technology (VOMIT) is a new phenomenon in which a condition such as a malignant tumour is detected by imaging, so that at first glance it seems wise to remove it. However, the medical procedures needed to remove it carry a morbidity risk that may be greater than the risk the tumour presents during the patient’s likely lifespan. Here, ignorance could be bliss for the patient. Doctors would examine the patient holistically, taking in mental health, emotional state, family support and many other factors that remain well beyond the grasp of AI to assimilate into an ethical decision.
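The risk comparison behind VOMIT can be sketched as simple arithmetic, even though the real decision cannot. The example below assumes, purely for illustration, that the tumour carries a constant and independent annual risk; the function names and all figures are hypothetical and carry no clinical meaning.

```python
def cumulative_risk(annual_risk, years):
    """Chance of at least one harmful event over `years`,
    assuming a constant, independent annual risk (a simplification)."""
    return 1 - (1 - annual_risk) ** years


def intervention_adds_risk(procedure_risk, annual_risk, years):
    """True when the procedure is riskier than leaving the tumour alone
    for the patient's expected remaining lifespan."""
    return procedure_risk > cumulative_risk(annual_risk, years)


# Hypothetical patient: 1% annual risk from the tumour, 5 years of
# expected remaining life, 8% morbidity risk from the procedure.
flag = intervention_adds_risk(procedure_risk=0.08, annual_risk=0.01, years=5)
print(flag)
```

A flag like this could at most prompt a conversation. It says nothing about mental health, family support or the patient's own wishes, which is why the judgement stays with the clinician and the patient.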

All decisions like these have a direct impact on people’s health and wellbeing. With cancer, the faster and more accurate these decisions are, the better. However, whenever cost and effectiveness are combined there is an imperative for ethical judgement rather than financial arithmetic.

Building blocks

Healthcare is a rich seam for AI, but its application is far wider. For instance, machine learning could also support policymakers in planning housebuilding and social housing allocation initiatives, where it could both reduce the time taken to reach a decision and make that decision more robust. Using AI in infrastructure departments could allow road surface inspections to be continuously updated via cheap sensors or cameras in all council vehicles (or crowd-sourced in some way). The AI could not only optimise repair work (human or robot) but also potentially identify causes and determine where strengthened roadways would cost less in whole-life costs versus regular repairs.

In the US, government researchers are already using machine learning to help officials make quick and informed policy decisions on housing. Using analytics, they analyse the impact of housing programmes on millions of lower-income citizens, drilling down into factors such as quality of life, education, health and employment. This instantly generates insightful, accessible reports for the government officials making the decisions. Now they can enact policy decisions as soon as possible for the benefit of residents.

Ethical concerns

While some of the fears about AI are fanciful, there is a genuine concern about the ethical deployment of such technology. In our healthcare example, allocation of resources based on gender, sexuality, race or income wouldn’t be appropriate unless these specifically had an impact on the prescribed treatment or its potential side-effects. This is self-evident to a human, but a machine would need it to be explicitly defined. Left to its own logic, a machine would likely display bias towards those groups whose historical data showed better outcomes, thus perpetuating any human equality gap present in the training data.
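One simple way to surface such a gap before a model learns from it is to compare historical outcome rates across groups. The sketch below is an illustrative check only, not a complete fairness audit; the group labels, records and function names are hypothetical.

```python
from collections import defaultdict


def outcome_rates(records):
    """Share of positive outcomes per group in the historical data."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        successes[group] += outcome  # outcome is 1 (good) or 0 (poor)
    return {group: successes[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the best and worst group outcome rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical training records: (group label, outcome).
records = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = outcome_rates(records)
gap = largest_gap(rates)
print(rates, gap)
```

A large gap does not prove unfairness on its own, but it tells the people responsible where a model trained on this data is likely to favour one group, and where human review is needed.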

The recent review by the Committee on Standards in Public Life into AI and its ethical use by government and other public bodies concluded that there are “serious deficiencies” in regulation relating to the issue, although it stopped short of recommending the establishment of a new regulator.

SAS welcomed the review and contributed to it. We believe these concerns are best addressed proactively by organisations that use AI in a manner which is fair, accountable, transparent and explainable.

The review was chaired by crossbench peer Lord Jonathan Evans, who commented:

“Explaining AI decisions will be the key to accountability – but many have warned of the prevalence of ‘Black Box’ AI. However, our review found that explainable AI is a realistic and attainable goal for the public sector, so long as government and private companies prioritise public standards when designing and building AI systems.”

Today’s increased presence of machine learning should be viewed as complementary to human decision-making within the public sector. It’s an assistive tool that turns growing data volumes into positive outcomes for people, quickly and fairly. As the cost of computational power continues to fall, ever-increasing opportunities will emerge for machine learning to enhance public services and help transform lives.