Barb Hyman, CEO and Founder of Sapia.ai, explains the benefits of employing AI to assist in decision-making and mitigate human biases

Organisations increasingly use artificial intelligence (AI) tools as part of decision-making to improve efficiency, raise the quality of outcomes, and mitigate human biases.

A 2022 survey conducted by the Society for Human Resource Management (SHRM) found that very large organisations (5,000+ employees) are leading adoption: 42% use AI and/or automation. Of those using AI and/or automation, 79% use it in recruitment, making recruitment the most common use case for AI in HR.

Increased efficiency is the top reason for using AI in recruitment (85%), followed by the ability to identify top candidates (44%) and reduce potential bias (30%). Government agencies facing similar challenges to large organisations can benefit from adopting AI tools in their people decisions.

The only proven way to interrupt human biases

Government agencies are, of course, entrusted with ensuring equal opportunities and fair treatment at a societal level by leading systemic changes to mitigate harmful human biases.

Most biases are unconscious. Unconscious bias training has not proven effective against them, which led the UK civil service to abandon such training. Using data and ethical AI is the only proven way to interrupt human bias in this area.

Inclusion starts with the hiring process. Many talent acquisition (TA) leaders default to the ‘human touch’ to attract, engage and win over talent. This can sideline, at the top of the funnel, candidates who identify as having a disability, a group that accounts for 17.8% of the population. They are less likely to apply for a role that forces candidates through an asynchronous video interview or a timed assessment.

Ethical AI tools, like Sapia.ai, can increase the diversity of the candidate pool at both the sourcing and application stages. A recent study by researchers in Australia and Sweden found that AI can improve gender diversity when hiring for a male-dominated technical role.

Using Sapia.ai’s Smart Interviewer as the underlying AI tool, the study found a 33% improvement in women’s interview completion rate when they were informed that their interviews were assessed by AI.

Would there be less bias when assessed by AI versus human reviewers?

Survey results suggest that the improved gender diversity was driven by women’s belief that there would be less bias when assessed by AI than by human reviewers. The study also found that human reviewers scored women lower when names were revealed. However, providing evaluators with AI-derived unbiased scores removed the gender gap, even when gender was known.

AI algorithms can be programmed to make objective assessments based on specific criteria, reducing the impact of human biases in the selection process. These models can be trained on diverse and representative data sets to ensure fair and inclusive outcomes, avoiding discrimination based on race, gender, age, or other protected characteristics.

These models can also be subjected to rigorous bias testing before deployment and monitored in real time for adverse impact once live.
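As an illustration of what such a check might look like, the sketch below computes per-group selection rates and compares each against the highest-rated group. It assumes the "four-fifths" rule of thumb (a ratio below 0.8 is flagged for review), which is one commonly used screen for adverse impact and is not necessarily the test any particular vendor applies. The function names, group labels and sample data are all hypothetical.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return {group: share of candidates selected} from (group, selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """List groups whose ratio falls below the threshold (assumed: four-fifths rule)."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical outcomes: 100 candidates per group, labels are placeholders.
sample = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

if __name__ == "__main__":
    print(adverse_impact_ratios(sample))
    print(flag_groups(sample))
```

A check like this could be run on a held-out test set before deployment and then repeated on live outcomes at regular intervals. A flagged ratio is a prompt for closer investigation rather than proof of discrimination, especially when group sizes are small.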

AI can enhance efficiency and accuracy

Government agencies often receive many applications for job vacancies, and reviewing them manually is time-consuming and resource-intensive. Ethical AI can streamline the hiring process by leveraging advances in natural language processing (NLP) and machine learning techniques to analyse and evaluate candidate data.

While AI-based automation can increase efficiency, it is important to consider the type of data used, both to avoid encoding biases and to limit privacy concerns. For example, research studies and publicised industry experiments have shown how résumé data can lead to biased AI models, even after removing gender-indicating information.

Another benefit of AI systems is that they can continuously learn and improve over time. This iterative process allows the algorithms to adapt to the evolving needs of government agencies and the changing dynamics of the job market.

Providing transparency and accountability in decision-making

Government agencies hold a unique responsibility to follow rigorous and transparent processes in their hiring decisions. The AI vendors they use must then demonstrate adequate levels of transparency to promote accountability and foster trust.

At Sapia.ai, we have published our own FAIR™ framework (available at Sapia.ai) for building and applying AI models responsibly. It includes the best-practice use of model cards, and we publish our core research in peer-reviewed journals and conferences for transparency.

The critical ethical questions of AI

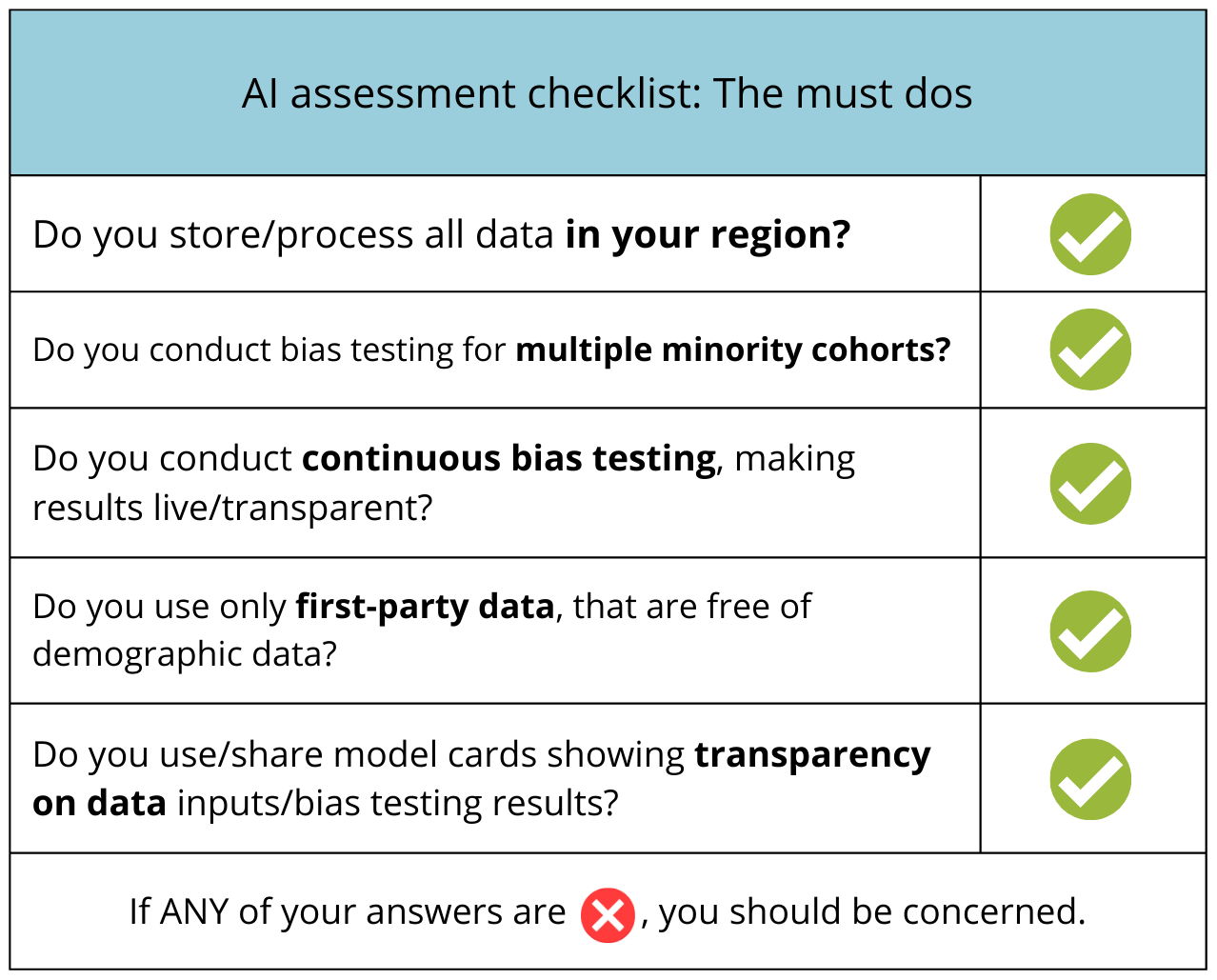

While AI can be invaluable in achieving efficiency, fairness, and quality of hiring, there are several questions a government agency should be asking before partnering with a vendor to mitigate human biases.

Sapia.ai has a proven history of helping government agencies improve hiring practices. We fully comply with relevant UK data privacy and security laws and are included in the G-Cloud 13 Framework. As a global leader in ethical AI for recruitment, we can help you successfully adopt a fair, transparent AI system.

More About Stakeholder

Sapia.ai’s mission: Offering ethical AI to streamline recruitment processes

With its blind, automated chat interview and comprehensive DEI analytics platform, Sapia.ai’s technology is the first solution of its kind to disrupt biases that affect traditional recruitment processes – achieving candidate satisfaction rates of 90%.